Anyscale Launches Endpoints Fine-Tuning

The open-source LLM community is quickly getting more options for fine-tuning models.

Hey Everyone,

I’ve been really impressed by the startup Anyscale, which recently announced fine-tuning support for Anyscale Endpoints.

Anyscale Endpoints supports fine-tuning

If you think about how this is getting cheaper in the Open-source space, it’s going to be pretty astounding how accessible this becomes in the 2020s.

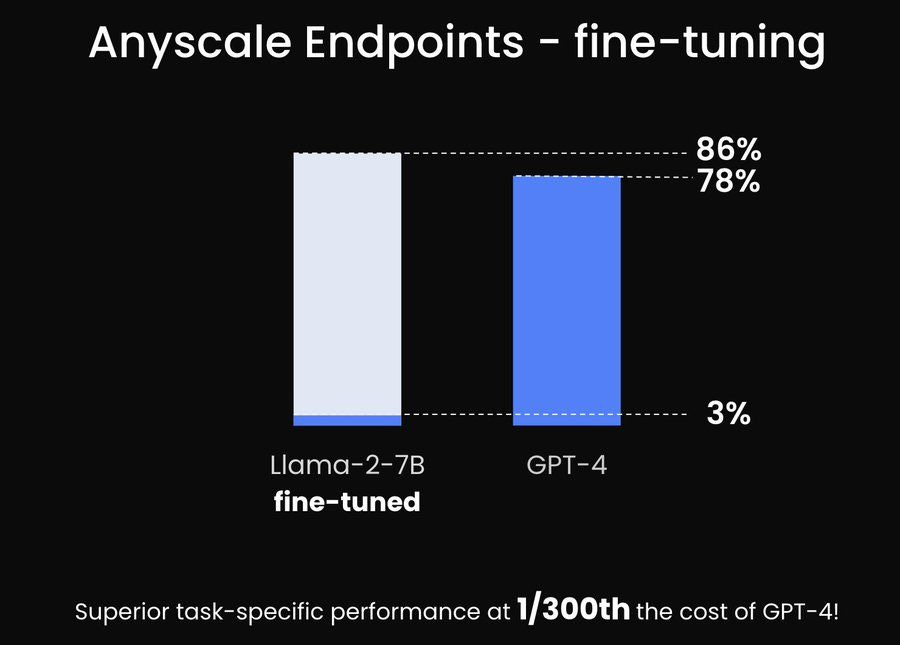

This is their LLM fine-tuning API. Fine-tuning is essential for cost reduction: according to Anyscale, it can enable task-specific performance superior to GPT-4 at 1/300th the cost.

Anyscale *Private* Endpoints

Private Endpoints lets you deploy the entire backend of the LLM API (including the models) *in your cloud*, *private* for your business, and 100% *customizable*. Anyscale calls this a unique product on the market.

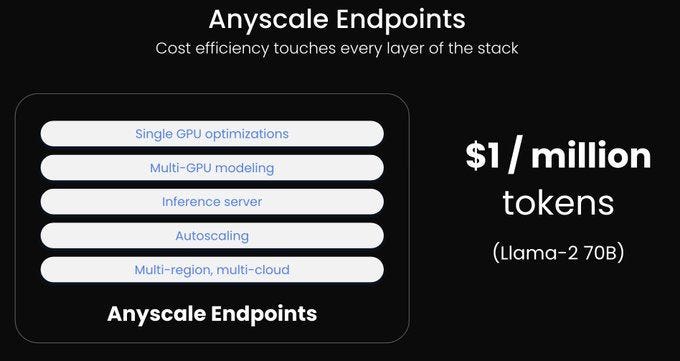

$1 per million tokens

Anyscale Endpoints charges $1 / million tokens for Llama-2-70B (and less for other models). Anyscale says it optimizes every layer of the stack to make this possible, and describes this as the *lowest price point* for Llama-2-70B.
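To get a feel for what that rate means in practice, here is a minimal back-of-the-envelope sketch. It assumes the $1 / million tokens price applies uniformly to every token processed (the post does not say whether input and output tokens are billed differently), and the function name and workload numbers are hypothetical, chosen only for illustration.

```python
from decimal import Decimal

# Price quoted in the post for Llama-2-70B on Anyscale Endpoints.
PRICE_PER_MILLION_TOKENS_USD = Decimal("1.00")


def estimate_cost_usd(
    total_tokens: int,
    price_per_million: Decimal = PRICE_PER_MILLION_TOKENS_USD,
) -> Decimal:
    """Estimate the cost in USD of processing `total_tokens` tokens.

    Assumption: one flat per-token rate for all tokens.
    """
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return Decimal(total_tokens) / Decimal(1_000_000) * price_per_million


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests averaging 1,500 tokens each.
    requests = 10_000
    avg_tokens_per_request = 1_500
    total = requests * avg_tokens_per_request
    print(f"{total:,} tokens -> ${estimate_cost_usd(total)}")
```

Under those assumptions, 15 million tokens would come to about $15. Swap in a different `price_per_million` to compare against another model or provider.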

These offerings build on everything they have been developing with Ray over the past few years as well as all of the advances in the open source community.

Anyscale’s goal is to provide the most performant and cost-efficient infrastructure for open LLMs.

Anyscale built the open-source distributed computing platform Ray, and is now launching a new service called Anyscale Endpoints, along with a new API, that it says will help developers integrate fast, cost-effective and scalable large language models into their applications. I have to admit, I find this really quite impressive.

LLMs have to become more affordable. While companies like OpenAI charge too much without offering adequate control, customization, privacy and cost efficiency, others are building products that can offer those things.

With the launch of Anyscale Endpoints, developers now have a simple way to build distributed applications that leverage the most advanced generative AI capabilities using the application programming interfaces of popular LLMs such as OpenAI LP’s GPT-4.

I’m a huge fan of what teams like Anyscale, Cohere, AI21 Labs and others are doing in the space.

Here's a quick glimpse at what Anyscale Endpoints offers:

State-of-the-art open-source and proprietary performance and cost optimizations.

A serverless approach to running open-source LLM models, mitigating infrastructure complexities.

A seamless transition for running base or fine-tuned models on your cloud.

Streaming response.

Utilization of the power of Ray Serve and Ray Train libraries.

It’s really something I hope startups, smaller enterprises and SMBs can experiment with.

Additional services from the Anyscale Platform:

If you need to fine-tune and deploy models on Anyscale's cloud infrastructure, the platform offers a comprehensive suite of services.

For a more secure, customized environment, the Anyscale platform provides the necessary resources and support for fine-tuning and deploying LLMs in your own cloud, managed by Anyscale.