Nvidia's AI Chip is for Real

Nvidia's Omniverse is taking industrial collaboration into the Cloud

Nvidia recently had its conference, so this will be a bit about that.

The PR of most tech companies is boring compared to the Nvidia hype. I really like it. Nvidia has announced a slew of AI-focused enterprise products at its annual GTC conference.

Tech conferences are mostly boring to me, but Nvidia’s plans feel impactful: the details of its new silicon architecture, Hopper; the first datacenter GPU built using that architecture, the H100; a new Grace CPU “superchip”; and vague plans to build what the company claims will be the world’s fastest AI supercomputer, named Eos.

Mysterious!

Dark!

The future of AI hardware and AI supercomputers.

If you think about how AI has matured, Nvidia’s chips have had perfect timing and driven so much of what is now possible.

Nvidia has benefited hugely from the AI boom of the last decade, with its GPUs proving a perfect match for popular, data-intensive deep learning methods.

“A new type of data center has emerged — AI factories that process and refine mountains of data to produce intelligence,” said Jensen Huang, founder and CEO of NVIDIA.

Nvidia has created a mythology around how crucial data centers and better chips have become. And to some degree, I’m a believer.

“The Grace CPU Superchip offers the highest performance, memory bandwidth and NVIDIA software platforms in one chip and will shine as the CPU of the world’s AI infrastructure.”

As the AI sector’s demand for data compute grows, says Nvidia, it wants to provide more firepower.

I really want this newsletter, Datascience Learning Center, to ultimately live up to its name. If you think this could be a valuable resource to others as well, consider giving me a tip.

In particular, the company stressed the popularity of a type of machine learning system known as a Transformer. This method has been incredibly fruitful, powering everything from language models like OpenAI’s GPT-3 to medical systems like DeepMind’s AlphaFold.

I find myself talking about Transformers a lot, too.

Indeed, such models have grown enormously in size over the space of a few years. When OpenAI launched GPT-2 in 2019, for example, it contained 1.5 billion parameters (or connections). When Google trained a similar model just two years later, it used 1.6 trillion parameters.
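To put that jump in perspective, here is a quick back-of-the-envelope sketch. It takes the two parameter counts above at face value and assumes smooth exponential growth over the two years, which is my simplification, not a claim from Nvidia or Google. The variable names are just for illustration.

```python
import math

# Figures quoted above; everything derived from them is a rough estimate.
gpt2_params = 1.5e9      # OpenAI GPT-2 (2019)
google_params = 1.6e12   # Google's model, two years later
years = 2

# Overall growth factor between the two models.
growth = google_params / gpt2_params

# Assumption: growth was smoothly exponential over the period.
annual_factor = growth ** (1 / years)
doubling_months = 12 * years * math.log(2) / math.log(growth)

print(f"Overall growth: about {growth:,.0f}x")
print(f"Implied yearly growth: about {annual_factor:.1f}x")
print(f"Implied doubling time: about {doubling_months:.1f} months")
```

On those assumptions, the headline parameter count grew roughly a thousandfold, which works out to a doubling every two to three months.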

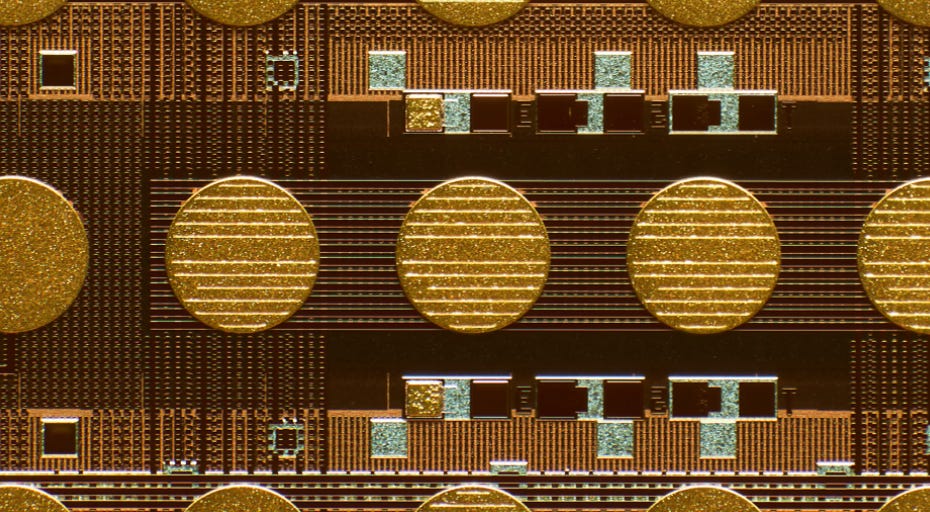

At the recent show, Nvidia announced its new H100 AI processor, a successor to the A100 that's used today for training artificial intelligence systems to do things like translate human speech, recognize what's in photos and plot a self-driving car's route through traffic.

Omniverse as Industrial Collaboration in the Cloud

Nvidia’s conception of the Omniverse puts other companies to shame in how they are approaching the Metaverse.

With the NVIDIA Omniverse real-time design collaboration and simulation platform, game developers can use AI- and NVIDIA RTX™-enabled tools, or easily build custom ones, to streamline, accelerate and enhance their development workflows.

Nvidia has essentially launched a Data-Center-Scale Omniverse Computing System for Industrial Digital Twins. Let that sink in.

“Physically accurate digital twins are the future of how we design and build,” said Bob Pette, vice president of Professional Visualization at NVIDIA. “Digital twins will change how every industry and company plans.”

In 2022, everything from Synthetic AI to digital twin technology is really trending. And I consider it way more important for the future of technology than NFTs or Web 3.0 hype. How we simulate, create and collaborate is changing thanks to A.I. and the sort of hardware Nvidia is building.

Nvidia says its new Hopper architecture is specialized for Transformers: it accelerates the training of Transformer models on H100 GPUs by six times compared to previous-generation chips.

AI-chips

Transformers

Digital Twins

Omniverse in Industrial design

Hopper demonstrates NVIDIA’s successful transition from a GPU that also does AI, to a compute accelerator that also does Graphics.

The H100 GPU itself contains 80 billion transistors and is the first GPU to support PCIe Gen5 and utilize HBM3, enabling memory bandwidth of 3TB/s.

NVIDIA is working with leading HPC, supercomputing, hyperscale and cloud customers for the Grace CPU Superchip. Both it and the Grace Hopper Superchip are expected to be available in the first half of 2023.


I think Nvidia’s approach to the Omniverse will really be able to enable massive B2B collaboration in innovation.

NVIDIA Omniverse is built from the ground up to be easily extensible and customizable with a modular development framework.

Much to do about A.I. - Not Just Chips

NVIDIA delivers real-world solutions today based on digital twin technology and collaboration. This is probably the best intersection of the Cloud and the Metaverse we have today from a technical standpoint. So yeah, Nvidia with capitals, that’s okay.

“For the training of giant Transformer models, H100 will offer up to nine times higher performance, training in days what used to take weeks,” said Paresh Kharya, Nvidia’s senior director of product management.

Between Omniverse and Transformers, Nvidia has found a way to be the AI-everything company in 2022.

Nvidia announced major updates to its NVIDIA AI platform, a suite of software for advancing workloads such as speech, recommender systems, hyperscale inference and more, which has been adopted by global industry leaders such as Amazon, Microsoft, Snap and NTT Communications.

Nvidia also announced updates to its various enterprise AI software services, including Maxine (an SDK to deliver audio and video enhancements, intended to power things like virtual avatars) and Riva (an SDK used for both speech recognition and text-to-speech).

The company also teased that it was building a new AI supercomputer, which it claims will be the world’s fastest when deployed. The supercomputer, named Eos, will be built using the Hopper architecture and contain some 4,600 H100 GPUs to offer 18.4 exaflops of “AI performance.” It’s hard to doubt Nvidia’s ambition at this point: it has tethered itself to the very future of A.I. in the 2020s.
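Those two Eos numbers imply a per-GPU figure, which is easy to check. This is only a sketch dividing the quoted totals; “AI performance” is Nvidia’s own metric, and I am assuming the total is spread evenly across all of the GPUs.

```python
# Figures quoted above for Eos; the per-GPU number is derived, not announced here.
eos_ai_flops = 18.4e18   # 18.4 exaflops of "AI performance"
eos_gpus = 4600          # "some 4,600" H100 GPUs

# Assumption: every GPU contributes equally to the total.
per_gpu_flops = eos_ai_flops / eos_gpus
per_gpu_petaflops = per_gpu_flops / 1e15

print(f"Implied 'AI performance' per H100: about {per_gpu_petaflops:.1f} petaflops")
```

That comes to roughly 4 petaflops of “AI performance” per H100, by Nvidia’s own accounting.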

The Dominance of the Nvidia GPU in A.I.

In 2019, NVIDIA GPUs were deployed in 97.4 per cent of AI accelerator instances – hardware used to boost processing speeds – at the top four cloud providers: AWS, Google, Alibaba and Azure. It commands “nearly 100 per cent” of the market for training AI algorithms, says Karl Freund, analyst at Cambrian AI Research.

Nearly 70 per cent of the top 500 supercomputers use its GPUs. Virtually all AI milestones have happened on NVIDIA hardware.

Andrew Ng’s YouTube cat finder, DeepMind’s board game champion AlphaGo and OpenAI’s language prediction model GPT-3 all run on NVIDIA hardware. It’s the ground AI researchers stand upon.

Over the past few years, a number of companies with strong interest in AI have built or announced their own in-house “AI supercomputers” for internal research, including Microsoft, Tesla, and Meta. But those aren’t primarily A.I. companies. Although they can pretend that they are.

How Fast Will Eos Be?

During his keynote address, Nvidia CEO Jensen Huang did say that Eos, when running traditional supercomputer tasks, would rack up 275 petaFLOPS of compute, 1.4 times faster than “the fastest science computer in the US” (Summit).
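Two more numbers fall out of Huang’s claim if you work backwards. The sketch below uses only figures quoted in this post; the Summit baseline is what the “1.4 times” claim implies, not an official benchmark result, and the comparison between the “AI” and traditional figures is my own framing.

```python
# Figures quoted in this post.
eos_traditional_pflops = 275.0   # traditional supercomputer tasks
speedup_vs_summit = 1.4          # Huang's "1.4 times faster"
eos_ai_pflops = 18.4 * 1000      # 18.4 exaflops of "AI performance", in petaflops

# Baseline implied by the claim (not an official Summit measurement).
implied_summit_pflops = eos_traditional_pflops / speedup_vs_summit

# How much bigger the "AI performance" headline is than the traditional figure.
ai_vs_traditional = eos_ai_pflops / eos_traditional_pflops

print(f"Implied Summit baseline: about {implied_summit_pflops:.0f} petaFLOPS")
print(f"'AI performance' vs. traditional compute: about {ai_vs_traditional:.0f}x")
```

In other words, the 18.4 exaflops headline is around 67 times larger than the figure Nvidia quotes for traditional workloads, so the two numbers should not be compared directly.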

“We expect Eos to be the fastest AI computer in the world,” said Huang. “Eos will be the blueprint for the most advanced AI infrastructure for our OEMs and cloud partners.”

I like Jensen Huang’s optimism and vision, always have. Because he actually knows A.I. is a thing worth cheering for.

“NVIDIA AI is the software toolbox of the world’s AI community — from AI researchers and data scientists, to data and machine learning operations teams,” said Jensen Huang, founder and CEO of NVIDIA. “Our GTC 2022 release is massive. Whether it’s creating more engaging chatbots and virtual assistants, building smarter recommendations to help consumers make better purchasing decisions, or orchestrating AI services at the largest scales, your superpowered gem is in NVIDIA AI.”

If you enjoy this read, give it a like and consider tipping, patronage or community support. This Newsletter only exists due to direct support from the small audience I have gathered from places like Reddit, LinkedIn and Google Search.