What are Graph Neural Networks?

Graph Neural Networks are just getting started in terms of A.I.'s impact on science.

Hey Guys,

So what is a Graph Neural Network again? In short, it is a deep learning model built for graphs: data structures that depict objects and their relationships as points connected by lines.

Kumo, a startup offering an AI-powered platform to tackle predictive problems in business, today announced that it raised $18 million in a Series B round led by Sequoia, with participation from A Capital, SV Angel and several angel investors.

Kumo’s platform works specifically with graph neural networks, a class of AI system for processing data that can be represented as a series of graphs. Graphs in this context are mathematical constructs made up of vertices (also called nodes) connected by edges (or lines). Graphs can model relations and processes in social, IT and even biological systems. For this reason, I believe they will intersect with quantum computing, biotechnology and advanced chemistry.
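To make the vocabulary concrete, here is a minimal sketch of a small graph stored as an adjacency list in plain Python. The node names and edges are made up for illustration. Each node maps to the set of nodes it shares an edge with.

```python
from collections import defaultdict

def build_graph(edges):
    """Build an undirected adjacency list: each node maps to the set of its neighbors."""
    graph = defaultdict(set)
    for u, v in edges:
        graph[u].add(v)  # an undirected edge connects u to v...
        graph[v].add(u)  # ...and v to u
    return dict(graph)

# Hypothetical toy graph: four nodes (vertices) joined by four edges.
edges = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D")]
graph = build_graph(edges)

for node in sorted(graph):
    print(node, "->", sorted(graph[node]))
# A -> ['B', 'C']
# B -> ['A', 'C']
# C -> ['A', 'B', 'D']
# D -> ['C']
```

Social networks, IT networks and molecules can all be written this way. Only the meaning of "node" and "edge" changes.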

Quantum chemistry, you say? How so? As one recent paper puts it, most current neural network models in quantum chemistry (QC) ignore molecular symmetry and treat the closely correlated real space (R space) and momentum space (K space) as two separate entities, which misses essential physics in molecular chemistry. By incorporating molecular symmetry and the elements of group theory, the authors propose a comprehensible method for applying symmetry in a graph neural network (SY-GNN), which extends property prediction to orbital symmetry for both ground and excited states.

This is more important than many realize. Today, developers are applying AI’s ability to find patterns to massive graph databases that store information about relationships among data points of all sorts. Together they produce a powerful new tool: graph neural networks.

According to Nvidia, graph neural networks (GNNs) apply the predictive power of deep learning to rich data structures that depict objects and their relationships as points connected by lines in a graph.

In GNNs, data points are called nodes, which are linked by lines — called edges — with elements expressed mathematically so machine learning algorithms can make useful predictions at the level of nodes, edges or entire graphs.
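Here is a minimal sketch of what "expressed mathematically" can look like, with one toy readout at each of the three prediction levels. The graph, the feature vectors and the readout functions are all hypothetical. In a trained GNN the vectors would be learned embeddings rather than hand-picked numbers.

```python
# Hypothetical 3-node graph: 0-1 and 1-2 are connected.
nodes = [0, 1, 2]
edges = [(0, 1), (1, 2)]

# Adjacency matrix: A[i][j] = 1 if nodes i and j share an edge.
n = len(nodes)
A = [[0] * n for _ in range(n)]
for i, j in edges:
    A[i][j] = A[j][i] = 1

# One made-up feature vector per node (a trained GNN would learn these).
X = [[1.0, 0.0],
     [0.5, 0.5],
     [0.0, 1.0]]

def node_score(i):
    """Node-level readout: a toy score for a single node."""
    return sum(X[i])

def edge_score(i, j):
    """Edge-level readout: dot product of the two endpoint vectors."""
    return sum(a * b for a, b in zip(X[i], X[j]))

def graph_embedding():
    """Graph-level readout: mean-pool all node vectors into one vector."""
    return [sum(col) / n for col in zip(*X)]

print(A)                  # [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
print(node_score(1))      # 1.0
print(edge_score(0, 1))   # 0.5
print(graph_embedding())  # [0.5, 0.5]
```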

What Can GNNs Do?

An expanding list of companies is applying GNNs to improve drug discovery, fraud detection and recommendation systems. These applications and many more rely on finding patterns in relationships among data points.

Researchers are exploring use cases for GNNs in computer graphics, cybersecurity, genomics and materials science. A recent paper reported how GNNs used transportation maps as graphs to improve predictions of arrival time.

GNNs could become more important as A.I. evolves in science and healthcare as well. Many learning tasks involve graph data, which carries rich information about the relations among elements. Modeling physical systems, learning molecular fingerprints, predicting protein interfaces and classifying diseases all demand a model that learns from graph inputs. In other domains, such as learning from unstructured data like text and images, reasoning over extracted structures (like the dependency trees of sentences and the scene graphs of images) is an important research topic that also needs graph reasoning models.

In short, many branches of science and industry already store valuable data in graph databases. With deep learning, they can train predictive models that unearth fresh insights from their graphs.

It turns out I wrote about graph neural networks back in April in this newsletter without even realizing it. I also discovered that even some quantum computing researchers are looking into them for the future of simulations and predictions in that field. The commercial viability is also high, which brings us back to Kumo.AI and what it plans to specialize in for enterprise customers.

Graph neural networks have powerful predictive capabilities. At Pinterest and LinkedIn, they’re used to recommend posts, people and more to hundreds of millions of active users. But at present they are computationally expensive to run — making them cost-prohibitive for most companies.
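One commonly cited reason for that cost, which is our addition rather than a claim from the sources above, is that with L message-passing layers each node's prediction depends on every node within L hops, and that neighborhood can grow roughly exponentially with L. The sketch below counts the receptive field on a hypothetical tree-shaped graph in which every node has three children.

```python
from collections import deque

def k_hop_size(graph, start, k):
    """Count the nodes within k hops of `start` (including start) using breadth-first search."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == k:
            continue  # do not expand beyond k hops
        for nbr in graph.get(node, ()):
            if nbr not in seen:
                seen.add(nbr)
                frontier.append((nbr, depth + 1))
    return len(seen)

def branching_tree(fanout, depth):
    """Hypothetical graph: every node links to `fanout` children, down to `depth` levels."""
    graph, next_id, level = {0: []}, 1, [0]
    for _ in range(depth):
        new_level = []
        for parent in level:
            for _ in range(fanout):
                graph[parent].append(next_id)
                graph[next_id] = [parent]  # store each edge in both directions
                new_level.append(next_id)
                next_id += 1
        level = new_level
    return graph

g = branching_tree(fanout=3, depth=4)
for layers in range(5):
    print(layers, k_hop_size(g, 0, layers))
# 0 1
# 1 4
# 2 13
# 3 40
# 4 121
```

Real graphs are messier, and hub nodes with millions of connections can make the growth even worse.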

Graph neural networks (GNNs) are neural models that capture the dependence of graphs via message passing between the nodes of graphs. In recent years, variants of GNNs such as the graph convolutional network (GCN), graph attention network (GAT) and graph recurrent network (GRN) have demonstrated ground-breaking performance on many deep learning tasks.
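To show what "message passing" means in practice, here is a minimal sketch of one GCN-style layer in plain Python. It assumes the propagation rule from Kipf and Welling's GCN, H_out = ReLU(D^-1/2 (A + I) D^-1/2 H W). The toy graph, features and identity weight matrix are hypothetical, and a real model would learn W by gradient descent.

```python
import math

def gcn_layer(A, H, W):
    """One GCN-style layer: H_out = ReLU(D^-1/2 (A + I) D^-1/2 @ H @ W)."""
    n = len(A)
    # Add self-loops so each node keeps its own features when aggregating.
    A_hat = [[A[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in A_hat]
    # Symmetric normalization: scale each edge by 1 / sqrt(deg_i * deg_j).
    norm = [[A_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Message passing: each node sums normalized feature vectors from itself and its neighbors.
    agg = [[sum(norm[i][k] * H[k][f] for k in range(n)) for f in range(len(H[0]))]
           for i in range(n)]
    # Linear transform with the weight matrix W, then a ReLU nonlinearity.
    out = [[sum(agg[i][f] * W[f][o] for f in range(len(W))) for o in range(len(W[0]))]
           for i in range(n)]
    return [[max(0.0, v) for v in row] for row in out]

# Hypothetical path graph 0 - 1 - 2 with 2-dimensional node features.
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
H = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
W = [[1.0, 0.0],
     [0.0, 1.0]]  # identity weights, so the output shows the aggregation alone

out = gcn_layer(A, H, W)
for row in out:
    print([round(v, 3) for v in row])
# [0.5, 0.408]
# [0.816, 0.742]
# [0.5, 0.908]
```

Stacking two such layers lets information travel two hops, which is how a node "hears" from its neighbors' neighbors.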

Indeed, GNNs feel like tapping into a universal language found in nature.

So much of our scientific knowledge is expressed as graphs: nodes and the relationships between them. A.I. in this domain has tremendous potential.

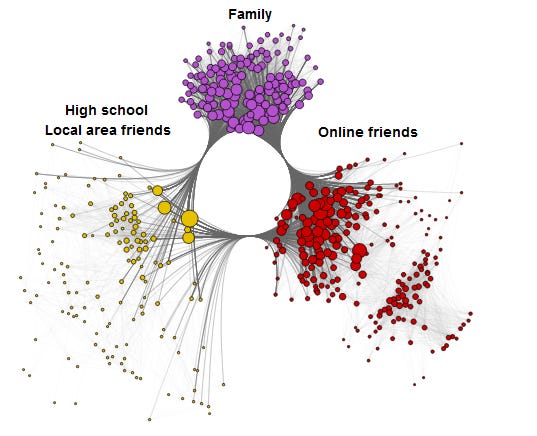

A graph can represent things like social media networks, or molecules. Think of nodes as users, and edges as connections. A social media graph might look like this:

If you think of nodes as customers and edges as the ways those customers interact with your product, GNNs have a lot of potential to optimize these relationships.
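As a rough illustration of customers and products as a graph, here is a minimal sketch of a weighted bipartite graph in which edges are interactions. It suggests products by walking customer -> product -> other customer -> product. The interaction weights, names and data are all hypothetical, and this is a simple neighborhood heuristic rather than a GNN. A GNN would instead learn embeddings by aggregating over these same multi-hop paths.

```python
from collections import defaultdict

# Hypothetical interaction weights: stronger interactions count more.
WEIGHTS = {"view": 1.0, "cart": 2.0, "purchase": 3.0}

# Hypothetical (customer, product, interaction) edges of a bipartite graph.
interactions = [
    ("alice", "laptop", "purchase"),
    ("alice", "mouse", "purchase"),
    ("bob", "laptop", "purchase"),
    ("bob", "laptop_bag", "cart"),
    ("carol", "mouse", "view"),
    ("carol", "keyboard", "purchase"),
]

def build_bipartite(interactions):
    """Weighted adjacency in both directions: customer -> products and product -> customers."""
    by_customer, by_product = defaultdict(dict), defaultdict(dict)
    for customer, product, kind in interactions:
        w = max(by_customer[customer].get(product, 0.0), WEIGHTS[kind])  # keep the strongest interaction
        by_customer[customer][product] = w
        by_product[product][customer] = w
    return by_customer, by_product

def recommend(customer, by_customer, by_product, top_k=3):
    """Score unseen products reachable via customer -> product -> other customer -> product."""
    scores = defaultdict(float)
    owned = by_customer[customer]
    for product, w1 in owned.items():
        for other, w2 in by_product[product].items():
            if other == customer:
                continue
            for candidate, w3 in by_customer[other].items():
                if candidate not in owned:
                    scores[candidate] += w1 * w2 * w3  # stronger paths add more evidence
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top_k]

by_customer, by_product = build_bipartite(interactions)
print(recommend("alice", by_customer, by_product))
# [('laptop_bag', 18.0), ('keyboard', 9.0)]
```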

Kumo remains in the pilot stage, but the startup has already raised $37 million. The predictive power of A.I. is only going to get better once GNNs become cheaper and are tailored to different use cases. This may also have some interesting intersections with quantum computing in the future, once those computers scale up.

Graph databases have grown from serving specific use cases, such as charting social relationships, to supporting a new generation of security and customer-360 solutions. Research firm Gartner has predicted they will drive a trend that will shape data and analytics (D&A) for years to come.

We live in a world permeated by relationships between things and systems.

This reminds me of computational biology and the future of genomics too. Graphs are a kind of data structure that models a set of objects (nodes) and their relationships (edges). Recently, research on analyzing graphs with machine learning has been receiving more and more attention because of the great expressive power of graphs; that is, graphs can represent a large number of systems across various areas, including social science (social networks (Wu et al., 2020)), natural science (physical systems (Sanchez et al., 2018; Battaglia et al., 2016) and protein-protein interaction networks (Fout et al., 2017)), knowledge graphs (Hamaguchi et al., 2017) and many other research areas (Khalil et al., 2017).

Recently, some Amazon researchers created a novel method for suggesting related products using directed graphs and graph neural networks. Their solution, DAEMON, is a graph neural network-based framework for related-product recommendation. After the study was presented at this year’s European Conference on Machine Learning (ECML), the team began deploying the model in production. Comparing model predictions with real customer co-purchases on two performance metrics, HitRate and mean reciprocal rank (MRR), the team showed that their method outperformed state-of-the-art baselines by 30% to 160%.
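For readers unfamiliar with the two metrics, here is a minimal sketch of HitRate@k and mean reciprocal rank as they are commonly defined. The exact evaluation protocol used in the DAEMON study (the value of k, candidate sets, tie handling) is not described here, so treat these functions and the toy data as illustrative assumptions.

```python
def hit_rate_at_k(ranked_lists, targets, k):
    """Fraction of queries whose true item appears in the top-k recommendations."""
    hits = sum(1 for ranked, target in zip(ranked_lists, targets) if target in ranked[:k])
    return hits / len(targets)

def mean_reciprocal_rank(ranked_lists, targets):
    """Average of 1/rank of the true item (ranks start at 1); a missing item contributes 0."""
    total = 0.0
    for ranked, target in zip(ranked_lists, targets):
        if target in ranked:
            total += 1.0 / (ranked.index(target) + 1)
    return total / len(targets)

# Hypothetical ranked recommendations for three queries,
# plus the item each customer actually co-purchased.
ranked_lists = [
    ["case", "charger", "stand"],
    ["charger", "cable", "case"],
    ["stand", "case", "charger"],
]
targets = ["case", "case", "cable"]

print(round(hit_rate_at_k(ranked_lists, targets, k=2), 3))      # 0.333
print(round(mean_reciprocal_rank(ranked_lists, targets), 3))    # 0.444
```

HitRate only asks whether the right item made the cut, while MRR also rewards ranking it near the top.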

I therefore think Kumo.AI could eventually be acquired by someone like Amazon. Amazon Neptune is a managed graph database service offered as part of Amazon Web Services, and a lot of AI-as-a-Service already runs in the cloud. First announced at Amazon's re:Invent conference in 2017, Neptune went into general availability in May 2018.

As for DAEMON, the fundamental difficulty with employing graph neural networks (GNNs) for related-product recommendation is that the relationships between items are asymmetric.
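Here is a small illustration of why asymmetry matters. A customer who buys a phone may want a case, but buying a case rarely calls for a new phone. If each item has a single embedding scored with a dot product, the score is symmetric and direction is lost. One common way to model direction, which is an assumption here and not necessarily how DAEMON works, is to give each item separate "source" and "target" vectors. All numbers below are hand-picked toys, not learned values.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

# Single embedding per item: dot(a, b) == dot(b, a), so direction is lost.
emb = {"phone": [1.0, 0.5], "case": [0.8, 0.6]}
print(dot(emb["phone"], emb["case"]) == dot(emb["case"], emb["phone"]))  # True

# Hypothetical separate "source" and "target" vectors per item.
src = {"phone": [1.0, 0.0], "case": [0.0, 1.0]}
tgt = {"phone": [0.0, 0.1], "case": [0.9, 0.0]}

def directed_score(a, b):
    """Score for recommending b after a: a's source vector against b's target vector."""
    return dot(src[a], tgt[b])

print(directed_score("phone", "case"))  # 0.9
print(directed_score("case", "phone"))  # 0.1
```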

GNNs are “catching fire because of their flexibility to model complex relationships, something traditional neural networks cannot do,” said Jure Leskovec, an associate professor at Stanford.

GNNs are already used by Amazon for fraud detection and by LinkedIn for social recommendations, but they clearly have much more utility to unlock.

“GNNs are general-purpose tools, and every year we discover a bunch of new apps for them,” said Joe Eaton, a distinguished engineer at NVIDIA who is leading a team applying accelerated computing to GNNs. “We haven’t even scratched the surface of what GNNs can do.”

Interesting stuff for the future.