Hey Guys,

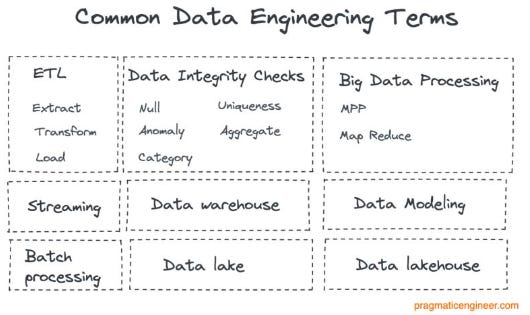

Data Engineering terms are very interesting especially for prospective data scientists.

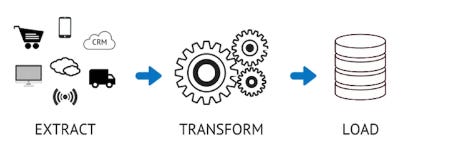

So If you’re reading this, you’ve probably heard the term “ETL” thrown around in relation to data, data warehousing, and analytics. It stands for “Extract, Transform, and Load.”

I was curious about its history.

ETL has roots in the 1970s and the rise of centralized data repositories. But it wasn’t until the late 1980s and early 1990s, when data warehouses took center stage, that we saw the creation of purpose-built tools to help load data into these new warehouses.

ETL is a process that extracts, transforms, and loads data from multiple sources to a data warehouse or other unified data repository. For more terms you can go here.

A typical ETL process collects and refines different types of data, then delivers the data to a data lake or data warehouse such as Redshift, Azure, or BigQuery.

Top ETL Tools in 2022

IBM DataStage

Integrate.io

Fivetran

Oracle Data Integrator

SAS Data Management

Talend Open Studio

Azure Data Factory

Pentaho Data Integration

Hadoop

Stitch

Dataddo

Singer

AWS Glue

Google Cloud Dataflow

Informatica PowerCenter

Skyvia

Data Warehousing Tools

The idea behind the data warehousing technique is to collect and manage data from varied sources to provide meaningful business insights to the user. A large amount of information is stored electronically by a business that, instead of transaction processing, is designed for query and analysis. Data warehousing is a process of transforming data into useful information and making it available to users for analysis.

Data Acquisition and Data Cleansing Tools

IBM Datacamp

Gartner Magic Quadrant

This Gartner report helps decision makers understand what’s needed to integrate complex data ecosystems and select data integration vendors that meet their specific needs.

ETL tools also makes it possible to migrate data between a variety of sources, destinations, and analysis tools. As a result, the ETL process plays a critical role in producing business intelligence and executing broader data management strategies.

Volumes of data can be extracted from a wide range of data sources, including:

Existing databases and legacy systems

Cloud, hybrid, and on-premises environments

Sales and marketing applications

Mobile devices and apps

CRM systems

Data storage platforms

Data warehouses

Analytics tools

WHY IS ETL IMPORTANT?

A lot of people ask, now that we’re in the cloud, why do we need ETL? Is it still important?

Productivity: It improves productivity with repeatable processes

Context: ETL helps businesses gain deep historical reference and context with data.

Accuracy: ETL improves data accuracy and audit capabilities for regulations and standards.

Consolidation: ETL provides a consolidated view of data for easier and improved analysis and reporting.

Anyone who is into Data Analytics, be it a programmer, business analyst or database developer, has been developing ETL pipeline directly or indirectly.

ETL History Continued

In the late 1980s and early 1990s, data warehouses came onto the scene. A distinct type of database, data warehouses provided integrated access to data from multiple systems – mainframe computers, minicomputers, personal computers and spreadsheets. But different departments often chose different ETL tools to use with different data warehouses. Coupled with mergers and acquisitions, many organizations wound up with several different ETL solutions that were not integrated.

Today in 2022, Core ETL and ELT tools work in tandem with other data integration tools, and with various other aspects of data management – such as data quality, data governance, virtualization and metadata.

ETL is closely related to a number of other data integration functions, processes and techniques. Understanding these provides a clearer view of how ETL works.

Think about it:

SQL

Scripts

Data mapping

Data quality

Scheduling and processing

Batch processing

Web services

Master data management

Data virtualization

Event stream processing and ETL

Image Credit: Aaron Zhu

Don’t confuse ETL with a tool, it’s a concept of data movement which can be established with the help of various tools. Those tools keep changing as the MDS keeps pivoting to better ways of handling data science and business objectives.

I’ve even heard of ETL roles. An ETL Developer is usually a Software Engineer that handles the Extraction, Transformation, and Loading data processes by developing infrastructures to do this efficiently.

ETL is the Backbone of Business Intelligence

Finding ways and methods to harness information is the duty of the Data Engineering team and specifically the ETL Engineer who is also known as the ETL Developer.

Data Scientists, Data Analysts, and Data Engineers are employed to unearth this valuable information in the advent of the Cloud, Big Data and Data science as a whole.

This process is known as Extract, Transform, and Load (ETL) which is the backbone of Business Intelligence (BI) as the data can not be used in its raw format to get actionable information.

This data may be obtained from transactional applications such as Customer Relational Management (CRM), Enterprise Resource Planning (ERP), Relational Database, XML, JSON, third party, and others.

Defined standards and models are used in Cleansing, Mapping, and Augmenting the data to prevent bad and non-matching data in the designated repository.

The final stage of the ETL process is Loading, which is uploading the refined data into the repository location such as Data Warehouses where they are secured, shared across users and departments both within or outside the organization.

The Rise of Data Engineering

Data Engineering is the broad spectrum of the team issued with the responsibilities of obtaining raw data, developing infrastructures, building and testing the Data Pipelines to optimize a system for Analytical Purposes.

Many polls I’ve seen show that Data Engineers are more in demand than data scientists and other related terms and positions. That being said new terms and positions are constantly being refined and re-defined. Generally speaking:

The Data Engineering team is usually large and may comprise all or some of the following depending on the scope of the project to be executed by an enterprise:

Data Architect

Database/Warehouse Developer

Database Administrator

Data Scientists

Business Intelligence Developer

ETL Engineer

Anyways guys I hope you found this basic run-through somewhat helpful even if it’s mostly re-hashing what you already know. I'm curious about many educational aspects around data science and how the Cloud and the Modern Data Stack have changed how we do things.

If Data is the new oil in the age of A.I., what comes next?

I’ll leave you with an interesting way of looking at the world.

Hierarchy of Needs from the perspective of Data Science

Source: Monica Rogati