Why Techno-Pessimists Always Win

OpenAI’s 5 stages to AGI, decoded. 🍓 Strawberry models, it turns out, are sort of expensive!

Your favorite cybernetic villain from the upcoming Marvel movie, Sam Altman.

Hey Everyone,

Recently, and seemingly all of a sudden, analysts and economists have begun to question the Generative AI bull-market narrative.

I even went viral on Threads due to this.

Goldman Sachs: AI Is Overhyped, Wildly Expensive, and Unreliable

The truth is that reversion to the mean is the norm in emerging tech. What do I mean by this? The marketing is always a fraud. Microsoft-backed OpenAI has cornered the market to the point that it no longer cares how people view it, with enough ChatGPT subscriptions for everyone there to retire wealthy.

I think it was the Goldman Sachs report that really turned the dial on the Techno-Optimists.

A subreddit that previously banned me when I posted a Substack URL suddenly featured me on its top page. Listen, it’s not like I’m the only skeptic around.

Ed Zitron also has a thorough writeup of the Goldman Sachs report over at Where's Your Ed At.

Jim Covello, Goldman Sachs’ head of global equity research, said that he is skeptical about both the cost of generative AI and its “ultimate transformative potential.”

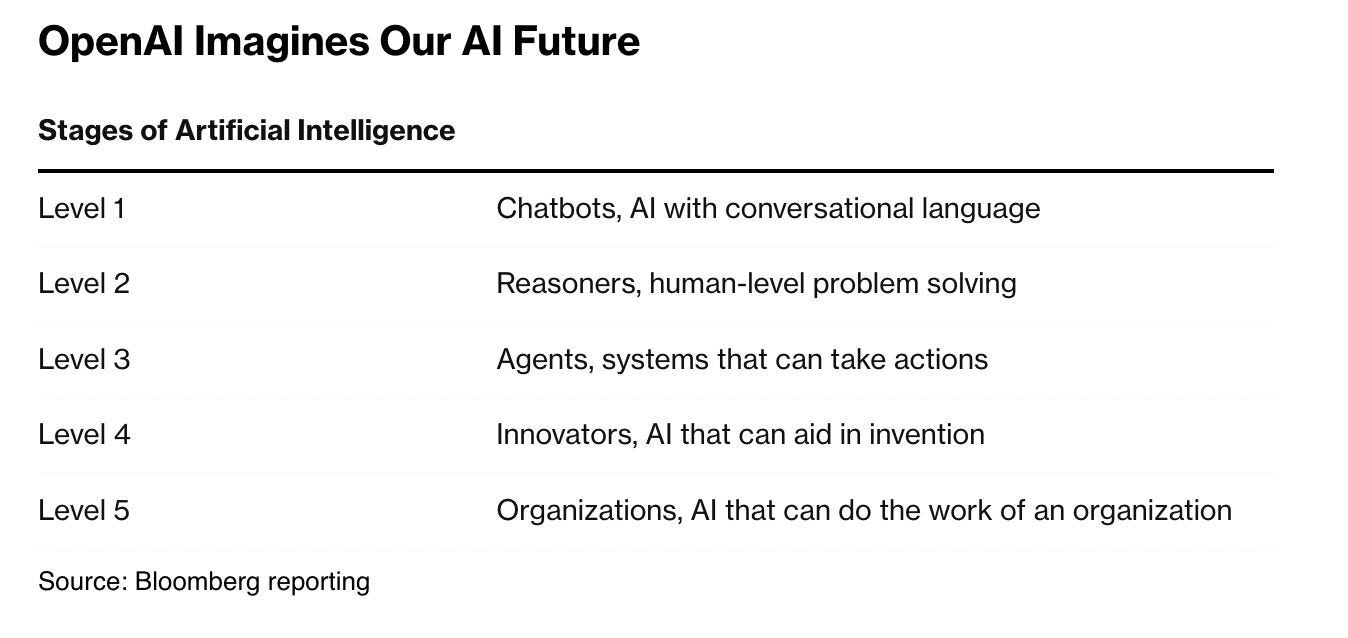

So let’s break down some of the pop-tech “scoops” and “leaks” that are really just OpenAI PR now:

OpenAI announced the new classification system at an employee meeting on Tuesday and plans to share it more widely soon, according to Bloomberg's source.

The five levels are:

Level 1: Chatbots, natural language

Level 2: Reasoners, can apply logic and solve problems at a human level

Level 3: Agents, can perform additional actions

Level 4: Innovators, can make new inventions

Level 5: Organizations, can do the work of an entire organization

OpenAI “Imagines” AGI somewhere - out there!

It’s a five-level system, a bit like Maslow’s Hierarchy of Needs if you happen to be a robot. And it’s not clear what this has to do with AGI at all, actually.

OpenAI's founding mission is to “ensure that artificial general intelligence (AGI) benefits all of humanity.” And the company defined AGI as an autonomous system that “outperforms humans at most economically valuable work.”

It seems to me that OpenAI’s actual mission is just to make money off suckers who think ChatGPT is useful, or at least more useful than Claude 3.5 (which it isn’t, by the way): to make shiny products that pay back Microsoft, in a get-rich scheme that has more in common with crypto-mafia pyramid schemes than with real innovation.

Now, of course, there is no universally agreed definition of Artificial General Intelligence (AGI), but OpenAI's charter describes it as AI that can “surpass humans in most economically valuable tasks”, which corresponds to level 5. That doesn’t sound much like the “generalized learning” a true AGI would be capable of.

While OpenAI is somehow valued at $80 billion (likely over $100 billion by now), its internal troubles just continue to mount. A Washington Post exclusive recently reported an anonymous source’s claim that OpenAI rushed through safety tests and celebrated its product before ensuring it was safe. That’s not what you want to hear if you are an Enterprise firm or individual trying to decide whether ChatGPT Pro (ChatGPT Plus) is worth it.

Grifting Generational Startups and Machine Reasoning

OpenAI internally believes it’s on the cusp of the 2nd stage (of five), which it calls “Reasoners.” This refers to systems that can do basic problem-solving tasks as well as a human with a doctorate-level education who doesn’t have access to any tools.

But if you cared about trust & safety, you wouldn’t be comparing token predictors with people, much less with doctorate-educated folk. Not only is it reductive, it’s borderline fraudulent.

OpenAI Thinks it will dominate Agentic AI

OpenAI defines Level 3 (“Agents”) as systems that can spend several days acting on a user’s behalf and Level 4 as AI that can develop innovations, per the report.

Q*, now called Strawberry, is supposed to be the model that makes this happen, even as more folks are using Claude 3.5’s Artifacts and Projects.

OpenAI has said for years that it’s working to build what’s often referred to as artificial general intelligence, or AGI: essentially, computers that can do a better job on most tasks than people. Sam Altman has often insinuated this was “close.” But if OpenAI is only at stage 1.5 out of 5, it’s increasingly obvious that this is a distant, long-term dream.

The last Microsoft mafia leader basically confirmed this for us recently:

OpenAI has previously stated that AGI (a nebulous term for a hypothetical concept) is the primary goal of the company. Maybe, but Microsoft’s Copilot Era is also about making money, and Microsoft makes money when OpenAI makes money. In case you are fuzzy on the details (and I don’t blame you), you might recall that Microsoft’s infusion of funding was part of a complicated deal in which the company would get 75% of OpenAI’s profits until it recoups its investment, after which OpenAI wins back its independence, so to speak. Nearly two years later, you have to imagine that OpenAI is making heady progress to that end.

How Profitable is ChatGPT Plus?

It turns out that ChatGPT Plus, the premium version of OpenAI's popular chatbot, has emerged as the primary revenue driver, contributing $1.9 billion of the $3.4 billion in revenue. That’s about 56% of OpenAI’s revenue.
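As a quick sanity check on that percentage, here is a minimal Python sketch that simply divides the two figures quoted above. The variable names are illustrative, and the numbers are the reported ones, not independently verified.

```python
# Revenue figures as quoted in the text, in billions of USD (not independently verified).
chatgpt_plus_revenue = 1.9
total_revenue = 3.4

# Fraction of total revenue attributed to ChatGPT Plus subscriptions.
plus_share = chatgpt_plus_revenue / total_revenue
print(f"ChatGPT Plus share of OpenAI revenue: {plus_share:.1%}")
```

This prints a share of 55.9%, which rounds to the roughly 56% cited.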

OpenAI has been called a “generational company” by the likes of Casey Newton, who is suddenly an expert on OpenAI’s platform. Meanwhile, OpenAI’s revenue success is choking the rest of the Generative AI startups and tools, which are barely making any money in comparison. The exception is Anthropic, which itself had to sell out to Amazon and Google just to compete.

According to 404 Media, the Goldman Sachs report comes on the heels of “AI’s $600 Billion Question,” a piece by David Cahn, a partner at Sequoia Capital, one of the largest investors in generative AI startups. The piece attempts to analyze how much revenue the AI industry as a whole needs to make simply to pay for the processing power and infrastructure being spent on AI right now. But the problem is that the Generative AI bubble now rests on Nvidia’s market cap, which is not likely to burst anytime soon, so that $600 billion question will become a $1 trillion question in 2025.

Generative AI might be the biggest Ponzi scheme of all time, outside of Bitcoin.

Goldman Sachs put out a 31-page report (titled “Gen AI: Too Much Spend, Too Little Benefit?”) that includes some of the most damning literature on generative AI I've ever seen. That is, if you like economists and analysts telling you what any person on the street could have told you about their mistrust of AI products.

All of this to say, WTF OpenAI.

Levels of AGI Nonsense

In a November 2023 paper, several researchers at Google DeepMind proposed a framework of five ascending levels of AI, including tiers such as “expert” and “superhuman.” The rankings resemble the system the automotive industry uses to assess the degree of automation in self-driving cars.

Now it seems OpenAI’s comms team thought it would be a good idea to publish its own version of this, probably shaped by the Copilot Era sensibilities of its sponsor, Microsoft.

Too Much Spend, Too Little Benefit?

Hierarchy of AGI is a “Work in Progress”

All of which is to say that things are getting really weird at OpenAI HQ, which also wants to expand to Seattle.

According to the levels OpenAI has come up with, the third tier on the way to AGI would be called “Agents,” referring to AI systems that can spend several days taking actions on a user’s behalf. Level 4 describes AI that can come up with new innovations. And the most advanced level would be called “Organizations.” The levels were put together by executives and other senior leaders at OpenAI, and the system is considered a work in progress.

This in an era when they tried to convince us that GPT and ChatGPT were the start of a general-purpose technology (also GPT).

The Strawberry project was formerly known as Q*, which Reuters reported last year was already seen inside the company as a breakthrough.

Maybe strawberries are what Sam Altman had for breakfast the day the old board at OpenAI fired him for “dishonesty”. We never assumed Sam was the honest sort.

When a company starts making promises, you know it’s the beginning of the end. Microsoft CTO Kevin Scott recently said that next-generation AI could pass PhD qualification tests. OpenAI CTO Mira Murati predicts PhD-level AI will happen in 1.5 years. But if your tool is at level 1 of 5, that implies at least a decade in normal human time, no? Erm, maybe several decades, or never, in reality.

OpenAI leaders also showed employees a research project with GPT-4 that they said demonstrated human-like reasoning skills. I’m not sure what this company’s definition of “reasoning” is either. I’ve been listening to boosters like Ethan Mollick rant about academic papers that support this sort of position for longer than my skepticism can tolerate.

The promise of generative AI technology to transform companies, industries, and societies is leading tech giants and beyond to spend an estimated ~$1tn on capex in coming years, including significant investments in data centers, chips, other AI infrastructure, and the power grid. But this spending has little to show for it so far.

How can you even trust products from a company like OpenAI, which now works with the Pentagon, has a former NSA leader on its board and illegally prevented whistleblowing with restrictive NDAs? OpenAI recently even partnered with the lab that built the atomic bomb. If OpenAI is working on military weapons and cybersecurity, what do you suppose China is doing with the technology, especially given its superior open-source LLMs?

🍓 We’re going to need a lot more than strawberries Sam.

As first reported by Rachel Metz at Bloomberg, this roadmap outlines the key steps and metrics OpenAI will use to measure its progress toward AGI. The scoop basically consists of being fed PR by OpenAI’s comms team. It depicts a hypothetical meeting between OpenAI’s C-level folk and the drones of their company, without providing any details.

Oddly, Goldman Sachs’ interviews with various experts make a far more convincing case for the opposite conclusion: that Generative AI cannot possibly deliver ROI.

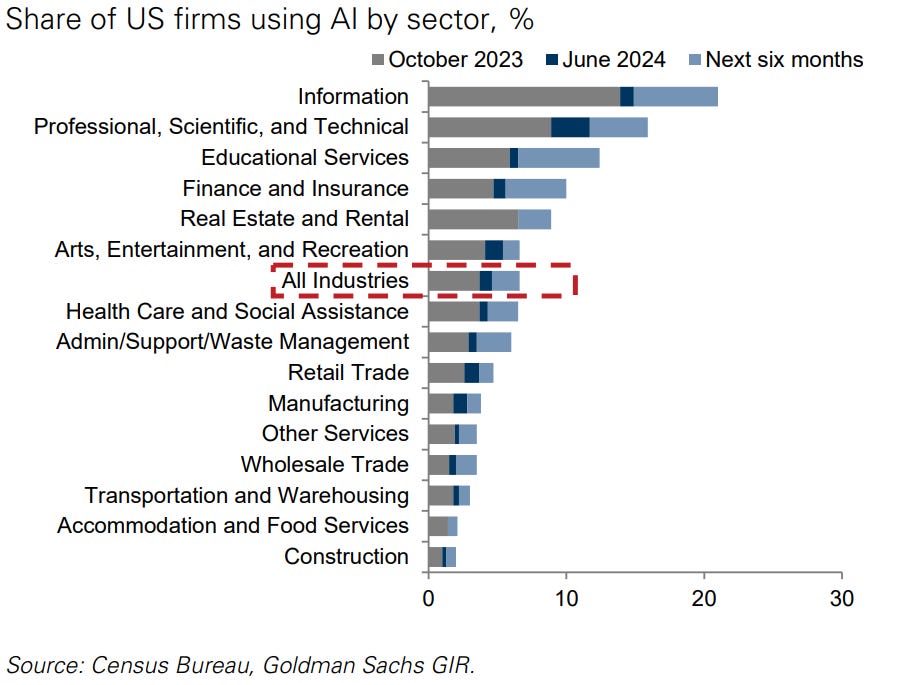

Ideas that Generative AI would disrupt millions of jobs, bump up productivity or increase GDP now seem almost laughable just 19 months after ChatGPT went live. Goldman Sachs among others made some economic predictions that never made a whole lot of sense.

OpenAI Chief Executive Officer Sam Altman has previously said he expects AGI could be reached this decade. But Google, xAI and others will have a say in that as well. Meanwhile, it appears that the absurd first-mover advantage of Microsoft’s Copilot Era and ChatGPT will have to contend with some mean reversion in the second half of 2024. The hype around GPT-4 already feels like a distant memory, and hype around GPT-5 is, for the most part, nowhere to be seen.

Generative AI and the Arc of Diminishing Returns

It would be one thing if ChatGPT were unique, but there are so many chatbots, frontier models and companies now, with even China reaching parity. If I had to guess, just like in the mobile era, China is better than the U.S. at building utility-driven consumer applications, even if America is better at the Enterprise AI stuff.

As OpenAI’s star culture begins to fade amid controversies, lawsuits and an enormous exodus of talent, we have to start assuming that Microsoft’s bet on Generative AI was a short-term gamble. OpenAI is also an ongoing competitor to Microsoft’s own Copilot Era for future revenue, once its debt has been paid. Meanwhile, Google and even Apple and Amazon will make real headway with the technology, even if they are late in comparison.

The lack of details about OpenAI’s Q* and Strawberry models is also concerning. Judging by GPT-4o’s product launch announcements, OpenAI appears to be declining relative to its competition. Generative AI may never return the capital that BigTech (through its Nvidia orders) and Venture Capital funds have already pumped into it in 2023 and 2024 alone.

The OpenAI Mafia and Endless Spinouts

Safety issues loom large at OpenAI, and they just keep coming. Current and former employees at OpenAI recently signed an open letter demanding better safety and transparency practices from the startup, not long after its safety team was dissolved following the departure of cofounder Ilya Sutskever. A month ago, Sutskever launched a new company, Safe Superintelligence Inc. (SSI), just one month after formally leaving OpenAI.

Meanwhile, Elon Musk’s xAI has raised a monster funding round that might make it, by the time it reaches Grok 3, a half-decent frontier model builder.

Whether this large spend will ever pay off in terms of AI benefits and returns, and the implications for economies, companies, and markets if it does—or if it doesn’t—is Top of Mind.

In a bear case, is it feasible that Generative AI never fulfills its potential in terms of utility, value, revenue generation or ROI in the big picture?

Meanwhile we have people saying Nvidia could reach $50 trillion in value.

James Anderson, an early investor in Amazon and Tesla, said the AI chipmaker could be worth $50 trillion in a decade.

Bloomberg lists OpenAI's five "Stages of Artificial Intelligence" as follows:

Level 1: Chatbots, AI with conversational language

Level 2: Reasoners, human-level problem solving

Level 3: Agents, systems that can take actions

Level 4: Innovators, AI that can aid in invention

Level 5: Organizations, AI that can do the work of an organization

However, are chatbots, agents and tools capable of replacing people at their jobs something society even wants or needs? Why are Microsoft and its hero startup pushing these things if we aren’t even sure we want them?

OpenAI's internal five-step plan for achieving Artificial General Intelligence (AGI) doesn’t provide a well-thought-out, structured framework for tracking progress in AI development. More likely, it has no real bearing on how AGI or ASI would actually manifest. It’s quite possible GPT-4o made the entire thing up, and it is being fed to OpenAI employees and the public as some kind of academically vetted theory backed by real research and peer-reviewed papers.

Now that OpenAI whistleblowers have urged the US financial watchdog (the SEC) to investigate non-disclosure agreements at the startup, claiming the contracts included restrictions such as requiring employees to seek permission before contacting regulators, the optics for OpenAI are very poor.

Elon Musk suggested that AI could surpass the intelligence of the smartest human beings as soon as 2025 or by 2026. But Musk is hardly a trustworthy source, given Tesla’s declining market share and all the promises about its Robotaxi autonomous-vehicle program, which has thus far turned out to be an utter fabrication.

Any AI classification system raises questions about whether it's possible to meaningfully quantify AI progress and what constitutes an advancement, especially if the leading candidate is more likely to come out of China than the United States. OpenAI working with the Pentagon is a sign the U.S. already knows it’s losing this battle for AI supremacy, and the reality is we’ve known this for years. Starving China of AI chips, semiconductors and external funding, and blacklisting its firms, won’t slow down the inevitable if China is indeed the more capable innovator.

OpenAI Might Be Hurting American Innovation

When Microsoft decided to gamble on OpenAI, it siphoned revenue away from the real Generative AI ecosystem by picking the winner. OpenAI’s annualized revenue has more than doubled, from $1.6 billion in late 2023 to $3.4 billion, but at what expense to the Generative AI ecosystem in America? It means the majority of other frontier model startups, including Mistral, Cohere, Aleph Alpha and AI21 Labs, along with the bulk of U.S. Generative AI startups building AI tools, don’t have a path to revenue generation or profitability.
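To put the growth claim in numbers, here is a minimal Python sketch that computes the growth multiple and the added annualized revenue from the two figures quoted above. It uses only the article’s reported numbers; the variable names are illustrative.

```python
# Annualized revenue figures as quoted in the text, in billions of USD.
late_2023_run_rate = 1.6
current_run_rate = 3.4

# How many times larger the current run rate is than the late-2023 one.
growth_multiple = current_run_rate / late_2023_run_rate
# Absolute increase in annualized revenue.
added_revenue = current_run_rate - late_2023_run_rate

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Added annualized revenue: ${added_revenue:.1f}B")
```

The multiple comes out at just over 2x, with about $1.8 billion of added annualized revenue.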

OpenAI’s closed-source models hamper its peers more than they help. And as OpenAI is being overtaken by Anthropic, there’s already a big disconnect.

OpenAI CTO Mira Murati has previously stated that the next-generation model, widely suspected to be called GPT-5, will be as intelligent as someone with a doctorate across a broad range of topics, but that we’re unlikely to see it until sometime next year, in 2025. What will China’s capability in LLMs, apps and products even be by then?

Investments in Generative AI are Costly

The splurge in AI chips and datacenters by BigTech might be a spectacular failure if Generative AI doesn’t hit its lofty expectations.

Microsoft, Meta, Tesla, Google, Apple and Amazon are all guilty of spending huge amounts before real revenue has even been seen. The law of mean reversion says this might end in tears.

Hype and Reality have been Disconnected

Musk has said publicly that the “total amount of sentient compute” (a concept that may refer to AI thinking and acting independently) will exceed all humans in five years. If that were true, OpenAI would be in a brilliant position.

Generative AI is more a Tactic to Spur Cloud Growth in America

For Microsoft, Google or AWS of course the real play is the race for Cloud computing revenue, growth and supremacy. Generative AI is just a means to an end in this case, with declining margins, cost cutting and ruthless layoffs at these companies.

The real revenue is coming from the Cloud, not from B2C or Enterprise AI related software directly.

Generative AI will shake up Education and Finance in 2024-2027

While the revenue needed to justify the investment may not materialize, that’s not to say that Generative AI isn’t impacting the world. Some of the impacts already highlighted include:

Science

Education

Finance

Real Estate

Entertainment

Healthcare

Retail

Manufacturing

Services

But I’d wager these impacts are far less radical than the ones narrated by the so-called leaders of the Techno-Optimism movement and by BigTech, with their lobbying, their PR machines and their constant bids for our attention.

Jim Covello: My main concern is that the substantial cost to develop and run AI technology means that AI applications must solve extremely complex and important problems for enterprises to earn an appropriate return on investment (ROI). We estimate that the AI infrastructure buildout will cost over $1tn in the next several years alone, which includes spending on data centers, utilities, and applications. So, the crucial question is: What $1tn problem will AI solve?

Truthfully, it doesn’t solve much but it does create many new problems on the internet.

Gen AI has lowered the public’s trust in AI systems and in BigTech.

GenAI is not convincing Enterprise customers that they require this technology immediately without doing their due diligence.

Though some companies are making layoffs where AI seems to be implicated.

Financial software company Intuit is laying off 1,800 workers across North America, many in San Diego, as it plans to invest more in artificial intelligence. At the same time, the company said it plans to hire more workers next year that will bolster its AI-driven strategy.

If Generative AI were such a great technology, why is this AI bull market coinciding with cost management, layoffs, weak adoption of Generative AI and a lack of corresponding revenue generation? Something is not adding up here.

The truth is that Jim Covello is not unique; his is a position many of us have held the entire time.

Skepticism is rational in a world where BigTech keeps hyping the next big thing, which is rarely the next big thing. Now, 19 months after ChatGPT’s launch, OpenAI’s antics and Sam Altman’s ethics have been laid bare for everyone to see.

Microsoft’s greed has been completely exposed even as they continue doing layoffs. In case you haven’t noticed, it’s pretty messed up.