Yann LeCun's Paper on creating autonomous machines

A Path Towards Autonomous Machine Intelligence in Review

Hey Guys,

I’ve been following Yann LeCun a lot more closely lately. His new position paper is a real culmination of his thinking, and it emerged recently as a PDF you can read here.

In the quest for human-level AI, Meta is betting on self-supervised learning. LeCun must be thinking about his legacy, since his ideas very much favor working towards human-level AI (HLAI) and abandoning our pride and hype in the pursuit of AGI.

This week I’ve written no less than four times about this topic, because I think it’s important. The PDF itself is 62 pages. So why is it important?

Please refer to the slides of the Baidu talk here. They’re a good companion to the YouTube video. I recommend watching the video if you think this topic might be for you.

Yann LeCun, Chief AI Scientist at Meta, published a blueprint for creating “autonomous machine intelligence” and I think summaries like this are worthwhile because they point to a path forward.

“We want to build intelligent machines that learn like animals and humans.” - Yann LeCun

We’ve been studying how human babies learn for decades and eventually we’ll integrate it into smart machines.

Advertising Dollars Could Fuel the Future of A.I.

If Yann is right, Meta AI will evolve a new kind of artificial intelligence as the company builds the Metaverse with billions of dollars from the advertising-based business model that Facebook has perfected over nearly the last 20 years!

The Metaverse could on the other hand prove a spectacular failure for Facebook and Mark Zuckerberg’s dream of domination in VR. Either way, some important R&D may take place due to the attempt.

Can SSL Scale new AI Systems?

Is this an important moment in the history of AI? This June 2022 series of papers from Meta, the company formerly known as Facebook, is about a type of self-supervised learning (SSL) for AI systems. SSL stands in contrast to supervised learning, in which an AI system learns from a labeled data set (the labels serve as the teacher who provides the correct answers when the AI system checks its work).

The comments on his PDF are interesting, though even the ones on LessWrong are pretty underwhelming.

If posting this on OpenReview.net (not arXiv for now) was a serious call to the community, so that people can post reviews, comments, and critiques, why is it not getting much actual attention or real critique? Do we maybe not trust Meta so much in 2022?

Or maybe it’s the odd arrogance of the tone of Yann LeCun.

I cannot say, since I don’t fully understand the importance of the “godfathers” of deep learning and so forth. I do find it somewhat peculiar.

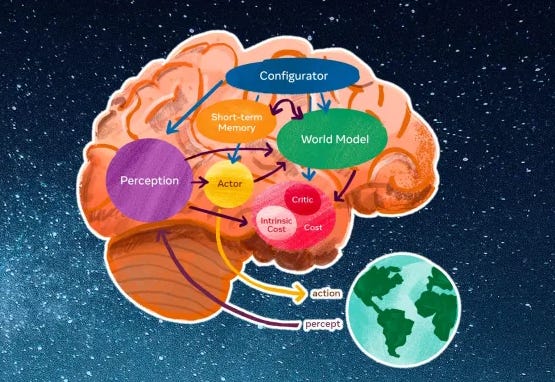

This position paper proposes an architecture and training paradigms with which to construct autonomous intelligent agents. It combines concepts such as configurable predictive world model, behavior driven through intrinsic motivation, and hierarchical joint embedding architectures trained with self-supervised learning.

Configurable predictive world model

Behavior driven through intrinsic motivation

Hierarchical joint embedding architectures

Training with self-supervised learning

But is A.I. making real headway in the early 2020s?

Inspired by Cognitive Science, Neuroscience, Robotics and Machine Learning

LeCun has compiled his ideas in a paper that draws inspiration from progress in machine learning, robotics, neuroscience and cognitive science. He lays out a roadmap for creating AI that can model and understand the world, reason and plan to do tasks on different timescales.

Machine Learning

Robotics

Neuroscience

Cognitive Science

Is Human-Level A.I. Within Sight?

Can a unified theory of A.I. that leads towards autonomous machine intelligence take shape in the 2020s?

While the paper is not a scholarly document, it provides a very interesting framework for thinking about the different pieces needed to replicate animal and human intelligence. It is clearly meant to be more than Yann LeCun tooting his own horn; we know the guy is smart.

LeCun has often spoken about his strong belief that SSL is a necessary prerequisite for AI systems that can build “world models” and can therefore begin to gain humanlike faculties such as reason, common sense, and the ability to transfer skills and knowledge from one context to another.

MAE

The new papers show how a self-supervised system called a masked auto-encoder (MAE) learned to reconstruct images, video, and even audio from very patchy and incomplete data. While MAEs are not a new idea, Meta has extended the work to new domains.
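To make the masking idea a bit more concrete, here is a minimal sketch of the training setup as I understand it. This is not Meta's code: the function names, the 75% mask ratio, and the "fill with the mean of what you can see" decoder are all my own assumptions, standing in for a trained neural network. The point is only that the model is scored on the patches it never saw.

```python
import random

def mask_patches(patches, mask_ratio=0.75, seed=0):
    """Split patch indices into a visible set and a masked (hidden) set."""
    rng = random.Random(seed)
    indices = list(range(len(patches)))
    rng.shuffle(indices)
    num_masked = int(len(patches) * mask_ratio)
    masked = sorted(indices[:num_masked])
    visible = sorted(indices[num_masked:])
    return visible, masked

def masked_mse(patches, reconstruction, masked):
    """Mean squared error computed on the masked patches only."""
    total, count = 0.0, 0
    for i in masked:
        for original, guess in zip(patches[i], reconstruction[i]):
            total += (original - guess) ** 2
            count += 1
    return total / count

# Toy "image": 8 patches of 4 pixel values each (made-up data).
patches = [[float(p)] * 4 for p in range(8)]
visible, masked = mask_patches(patches, mask_ratio=0.75)

# Hypothetical stand-in for a trained decoder: fill every masked patch
# with the mean value of the visible patches.
visible_mean = sum(patches[i][0] for i in visible) / len(visible)
reconstruction = [list(p) for p in patches]
for i in masked:
    reconstruction[i] = [visible_mean] * 4

loss = masked_mse(patches, reconstruction, masked)
print(f"visible={visible} masked={masked} loss={loss:.3f}")
```

A real MAE would replace the mean-filling step with an encoder and decoder trained to push this loss down.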

LeCun has been thinking and talking about self-supervised and unsupervised learning for years. But as his research and the fields of AI and neuroscience have progressed, his vision has converged around several promising concepts and trends.

World models are at the heart of efficient learning

How could machines learn as efficiently as humans and animals? How could machines learn to reason and plan? How could machines learn representations of percepts and action plans at multiple levels of abstraction, enabling them to reason, predict, and plan at multiple time horizons?

Among the known limits of deep learning are the need for massive training data and a lack of robustness in dealing with novel situations. The latter is often described as poor “out-of-distribution generalization” or sensitivity to “edge cases.”

Those are problems that humans and animals learn to solve very early in their lives.

A modular structure

One element of LeCun’s vision is a modular structure of different components inspired by various parts of the brain.

“The essence of intelligence is learning to predict,” LeCun says. And while he’s not claiming that Meta’s MAE system is anything close to an artificial general intelligence, he sees it as an important step.

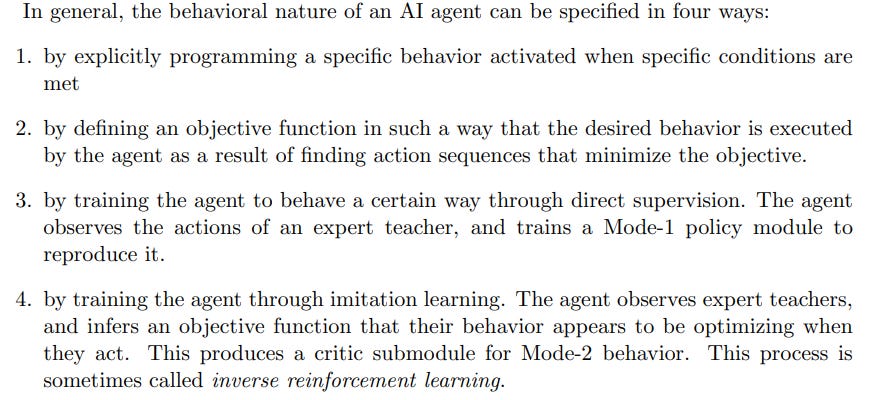

Supervised vs. Unsupervised

Supervised learning has two main drawbacks. First, supervised ML models require enormous human effort to label training examples. And second, supervised ML models can’t improve themselves because they need outside help to annotate new training examples.

In contrast, self-supervised ML models learn by observing the world, discerning patterns, making predictions (and sometimes acting and making interventions), and updating their knowledge based on how their predictions match the outcomes they see in the world. It is like a supervised learning system that does its own data annotation.
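Here is a tiny sketch of what "doing its own data annotation" can look like in practice. It is only an illustration under my own assumptions: the helper names and the toy number stream are made up, and the predictor is a hand-written rule rather than a learned model. The labels come straight out of the raw data, with no human in the loop.

```python
def make_self_supervised_pairs(sequence, context=3):
    """Turn an unlabeled sequence into (input window, target) pairs.

    The target for each window is simply the value that follows it,
    so the data supplies its own labels.
    """
    pairs = []
    for i in range(len(sequence) - context):
        window = list(sequence[i:i + context])
        target = sequence[i + context]
        pairs.append((window, target))
    return pairs

def predict_next(window):
    """Hypothetical predictor: extrapolate the last observed step."""
    return window[-1] + (window[-1] - window[-2])

# An unlabeled "observation stream" (made-up data).
stream = [2, 4, 6, 8, 10, 12]
pairs = make_self_supervised_pairs(stream, context=3)

# Compare each prediction with what actually came next. In a real
# system this error is the signal used to update the model.
errors = [abs(predict_next(window) - target) for window, target in pairs]
print(pairs)
print(errors)
```

On this perfectly regular stream the rule is never wrong; on messier data the errors would be nonzero, and that mismatch is what the model learns from.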

While the architecture that LeCun proposes is interesting, implementing it poses several big challenges. Among them is training all the modules to perform their tasks. While it’s elegant to think about, it’s not at all clear how Meta plans to put this into practice.

In his paper, LeCun proposes the “Joint Embedding Predictive Architecture” (JEPA), a model that uses energy-based models (EBMs) to capture dependencies between different observations.

In recent years, self-supervised learning has found its way into several areas of ML, including large language models.

“A considerable advantage of JEPA is that it can choose to ignore the details that are not easily predictable,” LeCun writes.
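A toy sketch may help show what "ignoring unpredictable details" means. Everything here is my own simplification: in the paper the encoders and the predictor are trained networks and there is also a latent variable, while below they are hand-written functions with hypothetical names. The energy is measured between embeddings, so the random "detail" in each observation never enters the score.

```python
import random

def encode(observation):
    """Hypothetical encoder: keep the coarse part, drop the fine detail."""
    coarse, _detail = observation
    return coarse

def predict(s_x):
    """Hypothetical predictor: the next coarse state is one step ahead."""
    return s_x + 1.0

def energy(x, y):
    """Squared distance between the predicted and actual embedding of y.

    Low energy means y is compatible with x; high energy means it is not.
    """
    s_x, s_y = encode(x), encode(y)
    return (predict(s_x) - s_y) ** 2

rng = random.Random(0)
# Each observation is (coarse position, unpredictable noise).
x = (3.0, rng.random())
y_compatible = (4.0, rng.random())
y_incompatible = (9.0, rng.random())

low = energy(x, y_compatible)      # predicted 4.0, actual 4.0
high = energy(x, y_incompatible)   # predicted 4.0, actual 9.0
print(low, high)
```

A model that predicted y directly in input space would also have to guess the noise term, which it cannot do; scoring in embedding space sidesteps that.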

By figuring out how to predict missing data, either in a static image or a video or audio sequence, the MAE system must be constructing a world model, LeCun says. MAE stands for masked auto-encoder.

LeCun clearly states that current proposals for reaching human-level AI will not work. I can only hope Meta has the answer. One popular proposal holds that by scaling transformer models with more layers and parameters and training them on bigger datasets, we’ll eventually reach artificial general intelligence.

LeCun does not believe that this kind of scaling can eventually reach AGI. He argues instead that LLMs and transformers work as long as they are trained on discrete values.

Not everyone agrees that the Meta researchers are on the right path to human-level intelligence. Another theory is “reward is enough,” proposed by scientists at DeepMind.

Anyways guys, for further reading you can go here, or here. I’ll be unpacking this paper in more detail, hopefully in the days and weeks to come.

Thanks for reading! If you want to support me and my writing and get access to premium content you know what to do for the price of a cheap cup of coffee.