OpenAI DevDay Highlights

November 6th, 2023 event tries to drum up excitement about OpenAI again. The Apple of AGI?

Hey Everyone,

For the developers and AI enthusiasts in the room, this first-ever DevDay by OpenAI was quite a spectacle.

So OpenAI’s big day came and went. This is just a highly skimmable version in case you want a brief summary.

🚨 Major release alert from OpenAI: a new GPT-4 Turbo model, lower prices, new Whisper and TTS models, built-in JSON support, and more! 👇

Here are the new models and products:

🔹GPT-4 Turbo with 128k context window (300 pages in a single prompt).

🔹GPT-4 Turbo can accept images as inputs.

🔹Update on Speech to Text model.

🔹New Text-to-speech capability.

🔹GPT-4 finetuning.

🔹DALL·E 3 API.

🔹Lower price.

What’s New?

You can try the Assistants API beta without writing any code by heading to the Assistants playground.

New models and developer products announced at DevDay

OpenAI claims 2 million devs build on its platform.

OpenAI claims 100 million “weekly users” of ChatGPT.

Video of Opening Keynote

Where are all the Devs?

Curiously, the live-stream on YouTube was watched live by fewer than 150k people. Not sure where all these “millions” of users are. Sure, some watched it on OpenAI’s website. Still, these are not the numbers you’d expect from a so-called viral product at the bleeding edge of Generative AI.

In a Nutshell

GPT-4 Turbo, currently in preview, will be up to three times cheaper for developers than GPT-4.

(1) GPT-4 Turbo 128k

OpenAI is releasing a new model with GPT-4-level performance that is cheaper and faster. It has a 128k context window (about a 300-page book), and OpenAI claims it stays accurate over long contexts.

(2) Substantial pricing reductions

The new GPT-4 Turbo will be about 2.75x cheaper than GPT-4 on average, opening new opportunities and business models that didn’t make sense before.

GPT-3.5 Turbo 16k is also getting a price cut - 3x less than the previous price.
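Where does "2.75x on average" come from when input tokens are 3x cheaper and output tokens only 2x cheaper? Here is a rough sketch of the arithmetic. The per-1K-token prices are my assumption of the list prices at announcement (they are not quoted in this post), and the helper names are made up for illustration.

```python
# Per-1K-token list prices in USD. These numbers are assumptions,
# not figures quoted in this post; check the current pricing page.
GPT4_PRICES = {"input": 0.03, "output": 0.06}
GPT4_TURBO_PRICES = {"input": 0.01, "output": 0.03}


def request_cost(prices, input_tokens, output_tokens):
    """Cost in USD of one request under a per-1K-token price table."""
    total = prices["input"] * input_tokens + prices["output"] * output_tokens
    return total / 1000


def savings_ratio(input_tokens, output_tokens):
    """How many times cheaper GPT-4 Turbo is than GPT-4 for this token mix."""
    old = request_cost(GPT4_PRICES, input_tokens, output_tokens)
    new = request_cost(GPT4_TURBO_PRICES, input_tokens, output_tokens)
    return old / new


if __name__ == "__main__":
    # Input-heavy, balanced, and output-heavy workloads.
    for mix in [(9000, 1000), (1000, 1000), (1000, 9000)]:
        print(mix, round(savings_ratio(*mix), 2))
```

Under these assumed prices, the ratio always lands between 2x and 3x. A workload with nine input tokens for every output token comes out at about 2.75x, so the headline number seems to assume prompt-heavy usage.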

(3) New modalities

OpenAI is releasing its first text-to-speech model, directly competing with the likes of ElevenLabs and PlayHT.

It is also opening API access to DALL-E 3, GPT-4 (Turbo and Vision), and this new text-to-speech model.

Lastly, OpenAI will release a new version of Whisper, V3.

(4) Better world knowledge

GPT’s knowledge cutoff was updated to April 2023 and will continue to improve over time.

Sam Altman also hinted at allowing users to upload their own data, directly competing with RAG providers.

(5) More control

Function calling is improved (more accurate, with support for multiple functions in one call), and there is a special JSON mode for LLM builders who need JSON output.

Also, users can now pass a seed parameter so the same prompt yields the same output most of the time (great for debugging and testing, though it does not by itself reduce hallucinations).

(6) Customization

Users will be able to fine-tune GPT-4, just a few months after GPT-3.5 fine-tuning was released.

OpenAI will also work with customers in a Palantir-like model, lending its own researchers and engineers to work with companies to build well-crafted models, from preprocessing to training and deployment.

(7) Higher rate limits

2x tokens per minute with the ability to ask for rate increases via the account management interface.

(8) Copyright shield

Like Google and Microsoft, OpenAI will defend its customers against copyright infringement claims.

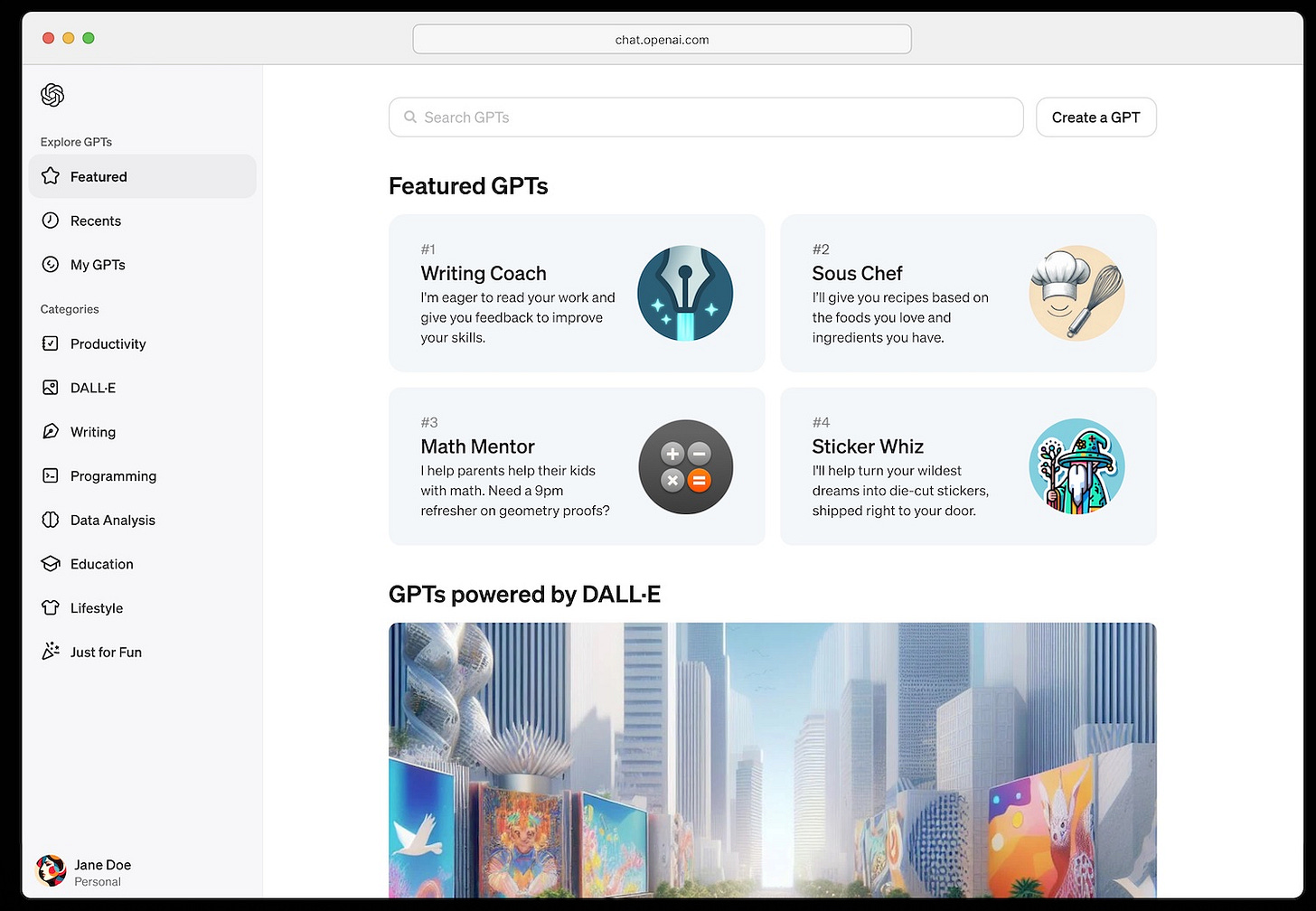

OpenAI is launching a GPT store later this month.

The store will let users share and sell their custom GPT bots. Sam Altman says OpenAI is going to “pay people who build the most useful and the most used GPTs” a portion of the company’s revenue.

OpenAI Tries to Build Momentum on ChatGPT Hyped Year

Altman also shared today that over two million developers use the platform, including more than 92 percent of Fortune 500 companies.

The company announced that DALL-E 3, OpenAI’s text-to-image model, is now available via an API after first coming to ChatGPT and Bing Chat.

OpenAI is launching a new API, called the Assistants API, that will help developers build “agent-like experiences” within their apps.

According to OpenAI, GPTs can be made with no coding experience and can be as simple or as complex as you like.

What are GPTs by OpenAI?

The company said they are rolling out custom versions of ChatGPT that you can create for a specific purpose—called GPTs. GPTs are a new way for anyone to create a tailored version of ChatGPT to be more helpful in their daily life, at specific tasks, at work, or at home—and then share that creation with others.

OpenAI claimed 460 of the Fortune 500 companies are using its products.

GPTs will be available to paying ChatGPT Plus subscribers and OpenAI enterprise customers, who can make internal-only GPTs for their employees.

For me, GPTs are perhaps the most intriguing new feature they announced. We know that Character.AI has been very successful, especially with Gen Z and younger users.

Get more details of the below here.

Function calling updates

Function calling lets you describe functions of your app or external APIs to models, and have the model intelligently choose to output a JSON object containing arguments to call those functions.
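To make that concrete, here is a minimal sketch of the app side of function calling. No API call is made: the model's reply is simulated, and `get_weather`, `REGISTRY`, and `dispatch` are hypothetical names I made up. The tool description and tool-call shapes follow the Chat Completions format as I understand it, so treat them as assumptions and check the official reference.

```python
import json


def get_weather(city: str, unit: str = "celsius") -> dict:
    """Hypothetical app function; returns stubbed data for illustration."""
    return {"city": city, "unit": unit, "forecast": "sunny"}


# JSON Schema description of the function, sent to the model as a "tool".
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the weather forecast for a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string"},
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["city"],
            },
        },
    }
]

# Maps the names the model may pick to real Python callables.
REGISTRY = {"get_weather": get_weather}


def dispatch(tool_call: dict) -> dict:
    """Parse the model's JSON arguments and call the matching function."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])
    return REGISTRY[name](**args)


# Simulated model output: the arguments arrive as a JSON string.
simulated_call = {
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
}

if __name__ == "__main__":
    print(dispatch(simulated_call))
```

The point is that the model never runs your code. It only picks a function name and fills in the arguments; your app decides whether and how to execute the call.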

Improved instruction following and JSON mode

GPT-4 Turbo performs better than our previous models on tasks that require the careful following of instructions, such as generating specific formats (e.g., “always respond in XML”). It also supports our new JSON mode, which ensures the model will respond with valid JSON.

Reproducible outputs and log probabilities

The new seed parameter enables reproducible outputs by making the model return consistent completions most of the time.
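As a rough sketch, this is what a request using both JSON mode and a seed might look like. It only builds the request body and does not call the API. The field names (`seed`, `response_format`) and the preview model name are my understanding of the Chat Completions API at launch, and `build_chat_request` is a made-up helper, so treat all of them as assumptions.

```python
import json


def build_chat_request(question: str, seed: int = 42) -> dict:
    """Build a Chat Completions request body with JSON mode and a fixed seed."""
    return {
        "model": "gpt-4-1106-preview",  # assumed preview model name
        "seed": seed,  # same seed + same parameters: mostly consistent output
        "response_format": {"type": "json_object"},  # JSON mode
        "messages": [
            # As I understand it, JSON mode expects the word "JSON"
            # to appear somewhere in the messages.
            {"role": "system", "content": "Reply in JSON with the keys 'answer' and 'confidence'."},
            {"role": "user", "content": question},
        ],
    }


if __name__ == "__main__":
    body = build_chat_request("What was announced at DevDay?")
    print(json.dumps(body, indent=2))
```

Note that a seed gives you consistency "most of the time", not a guarantee, so tests that depend on exact wording can still be flaky.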

Assistants API, Retrieval, and Code Interpreter

An assistant is a purpose-built AI that has specific instructions, leverages extra knowledge, and can call models and tools to perform tasks. The new Assistants API provides new capabilities such as Code Interpreter and Retrieval as well as function calling to handle a lot of the heavy lifting that you previously had to do yourself and enable you to build high-quality AI apps.

Assistants also have access to call new tools as needed, including:

Code Interpreter: writes and runs Python code in a sandboxed execution environment, and can generate graphs and charts, and process files with diverse data and formatting. It allows your assistants to run code iteratively to solve challenging code and math problems, and more.

Retrieval: augments the assistant with knowledge from outside our models, such as proprietary domain data, product information or documents provided by your users. This means you don’t need to compute and store embeddings for your documents, or implement chunking and search algorithms. The Assistants API optimizes what retrieval technique to use based on our experience building knowledge retrieval in ChatGPT.

Function calling: enables assistants to invoke functions you define and incorporate the function response in their messages.
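The three tools above could be combined in one assistant definition along these lines. This sketch only builds the request body. The tool type strings and field names reflect my understanding of the beta at launch and may have changed since, and `build_assistant_request` is a hypothetical helper.

```python
def build_assistant_request(name: str, instructions: str, functions=()) -> dict:
    """Build an assistant definition enabling the three tool types."""
    # Built-in tools; type strings are assumed from the beta at launch.
    tools = [{"type": "code_interpreter"}, {"type": "retrieval"}]
    # Each custom function is wrapped as a "function" tool.
    tools += [{"type": "function", "function": spec} for spec in functions]
    return {
        "name": name,
        "instructions": instructions,
        "model": "gpt-4-1106-preview",  # assumed preview model name
        "tools": tools,
    }


if __name__ == "__main__":
    # Hypothetical function spec, described with JSON Schema.
    lookup_order = {
        "name": "lookup_order",
        "description": "Look up an order by its ID.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
    request = build_assistant_request(
        "Support bot", "Answer questions about orders.", [lookup_order]
    )
    print([tool["type"] for tool in request["tools"]])
```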

Longer Context Windows

GPT-4 Turbo will offer a longer, 128K-token context window that can handle roughly 300 pages of text in a single prompt.
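As a quick sanity check on the "300 pages" figure, here is the back-of-the-envelope math using the 128K window quoted elsewhere in this post. The words-per-token ratio is a common rule of thumb for English text, not something OpenAI stated at the event, so treat it as an assumption.

```python
CONTEXT_TOKENS = 128_000  # 128K context window
PAGES = 300               # OpenAI's "more than 300 pages" claim
WORDS_PER_TOKEN = 0.75    # assumed rule of thumb for English text

tokens_per_page = CONTEXT_TOKENS / PAGES
words_per_page = tokens_per_page * WORDS_PER_TOKEN

if __name__ == "__main__":
    print(f"{tokens_per_page:.0f} tokens per page")
    print(f"{words_per_page:.0f} words per page")
```

That works out to a few hundred words per page, which is in the range of a typical paperback, so the claim looks plausible for plain prose. Code or non-English text usually takes more tokens per word.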

Will OpenAI be the Apple of GPTs and LLMs?

The App Store model, where Apple takes up to a 30% cut, has proven unbelievably lucrative, so it should come as no surprise that OpenAI is attempting to replicate it here, practically cloning it. But does it make sense? Not only will GPTs be hosted and developed on OpenAI platforms, but they will also be promoted and evaluated there.

Certainly this will lead to a lot of products built on top of OpenAI that may or may not have much longevity.

It definitely will have a high grifting-factor a la Sammy:

“We’re going to pay people who make the most used and most useful GPTs with a portion of our revenue,” and they’re “excited to share more information soon,” Altman said.

It’s difficult to imagine this taking off in the way OpenAI hopes.

OpenAI is frantically trying to build a business model that has an in-built moat to its first mover advantage, even as other similar companies gain more funding.

OpenAI has done heavy marketing that it’s an AGI company, though Sam Altman claims ChatGPT went viral by word of mouth alone. If Twitter bots and paid influencers are word of mouth, I guess that means that Generative A.I. will save the world as well!

Still, GPTs, a Store, and Turbo make an impressive trinity of announcements, even if we mostly already knew about them.

GPT-4 Turbo will also “see” more data, with a 128K context window, which OpenAI says is “equivalent to more than 300 pages of text in a single prompt.”

The event was extremely commercial and made OpenAI seem more mainstream than it is. As far as the public knows, it is a one-year-old company, but it has been around since December 2015.

OpenAI says it will be monitoring activity of GPTs to block things like fraud, hate speech, and “adult themes.” As TechCrunch notes, it’s not clear at this point whether there will be the ability to simply charge for your GPT, or whether it will be strictly revenue sharing.

Since Microsoft reportedly takes 75% of OpenAI’s profits until its investment is paid back, Microsoft appears to be giving OpenAI the front seat while pulling the strings from the back room.

OpenAI has a facade of being very privacy and ethics conscious. When the GPT Store launches down the road, OpenAI will only accept agents from people who have verified their identity. Initially, GPTs will be accessible through shareable web links. Still, personally, I don’t find Sam Altman a very trustworthy source. The “grift” factor is really high here.

The company still has that AGI method to its corporate madness of closed-source commercialization of LLMs, calmly reminding us that ultimately, OpenAI sees its GPT platform as bringing it one step closer to its main goal: the creation of an AI superintelligence, or AGI.

View OpenAI’s tweet about GPTs:

https://twitter.com/OpenAI/status/1721594380669342171

3 Main Takeaways

1. Their new model GPT-4 Turbo supports 128K context and has fresher knowledge than GPT-4. Its input and output tokens are respectively 3× and 2× less expensive than GPT-4. It’s available now to all developers in preview.

2. Assistants API and new tools (Retrieval, Code Interpreter) will help developers build world-class AI assistants within their own apps.

3. The platform is becoming multimodal. GPT-4 Turbo with Vision, DALL·E 3, and text-to-speech are all now available to developers.

Kudos to OpenAI for trying so hard.

That’s all I got.